Tackling Deepfake Fraud: Strategies for Banking, Insurance, and Beyond

Deepfake technology is AI's double-edged sword. It offers potential benefits in entertainment, education, and communication, but it poses fraud and misinformation threats in banking, insurance, real estate, online marketplaces, and medicine, which makes effective countermeasures crucial.

Introduction

In recent years, deepfake technology has emerged as a double-edged sword within the digital realm. On one hand, it showcases the remarkable capabilities of artificial intelligence, offering potential benefits in fields like entertainment, education, and communication. On the other, it presents a growing threat of fraud and misinformation, particularly in sectors such as banking, insurance, real estate, online marketplaces, and medicine. As these industries increasingly rely on digital and visual content, the potential for deepfake exploitation grows, making the development of effective countermeasures crucial.

Understanding Deepfake Technology

Deepfake Basics:

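Deepfakes are generated by machine learning models, most commonly generative adversarial networks (GANs), though autoencoders and diffusion models are also used. A GAN pairs a generator, which produces synthetic content, with a discriminator, which tries to tell that content apart from real data. Training the two against each other on vast datasets of images and videos yields convincing fake content in which one person's likeness is swapped with another's or manipulated to create entirely fictional scenarios.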

Evolution and Accessibility:

Initially requiring substantial technical expertise and resources, deepfake technology has become more accessible due to advancements in AI tools and the proliferation of user-friendly software. This accessibility increases the risk of misuse across various sectors.

The Impact of Deepfakes in Key Industries

Banking:

In banking, deepfakes can facilitate identity theft, unauthorized access to accounts, and misleading communications that could affect stock prices or market movements.

Insurance:

The insurance sector faces threats from deepfakes in the form of fraudulent claims, where fabricated incidents or damages are presented as real to claim payouts.

Online Marketplaces:

For online marketplaces, deepfakes can misrepresent products or sellers, leading to trust issues and potential financial losses for buyers.

Real Estate:

In real estate, deepfakes might be used to create fake property listings or alter images of properties, misleading potential buyers and harming sales integrity.

Medicine:

The medical field could see deepfakes used in manipulating medical imagery or impersonating professionals, which could have dire consequences for diagnoses and patient trust.

Current Mitigation Efforts

Detection Technologies:

Various AI tools have been developed to detect deepfakes by analyzing inconsistencies in images or videos, such as irregular blinking patterns, unnatural lighting shifts, or audio that does not match the visuals.
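
As a rough illustration of the blinking cue, the sketch below counts blinks from a per-frame eye-openness signal and flags clips whose blink rate is implausibly low. It assumes the eye aspect ratio (EAR) values come from a separate facial-landmark step that is not shown. The function names, the 0.21 closed-eye threshold, and the floor of 5 blinks per minute are illustrative assumptions, not values taken from any particular detector.

```python
from typing import Sequence


def count_blinks(ear_values: Sequence[float], closed_threshold: float = 0.21,
                 min_closed_frames: int = 2) -> int:
    """Count blinks as runs of consecutive frames in which the eye is 'closed'.

    ear_values: one eye-aspect-ratio value per video frame (assumed input).
    A run counts as a blink only if it lasts at least min_closed_frames.
    """
    blinks = 0
    run_length = 0
    for ear in ear_values:
        if ear < closed_threshold:
            run_length += 1
        else:
            if run_length >= min_closed_frames:
                blinks += 1
            run_length = 0
    # Count a blink that is still in progress when the clip ends.
    if run_length >= min_closed_frames:
        blinks += 1
    return blinks


def blink_rate_is_suspicious(ear_values: Sequence[float], fps: float,
                             min_blinks_per_minute: float = 5.0) -> bool:
    """Flag a clip whose blink rate falls below an assumed plausible floor."""
    if not ear_values or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(ear_values) / fps / 60.0
    rate = count_blinks(ear_values) / minutes
    return rate < min_blinks_per_minute
```

A single cue like this is weak on its own and easy for newer generators to defeat, so in practice it would be one signal among several rather than a verdict.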

Regulatory Measures:

Governments and international bodies are beginning to draft and enact legislation that targets the malicious creation and distribution of deepfakes, focusing on consent and the intent behind their use.

Industry Guidelines:

Many sectors are establishing guidelines for content verification to ensure the authenticity of digital media circulated within their operations.

Advanced Technologies to Combat Deepfake Fraud

AI and Machine Learning:

Sophisticated algorithms are being enhanced to not only detect deepfakes but also to trace their origins, helping to identify and prosecute perpetrators.

Biometric Verification:

Banking and insurance sectors are increasingly relying on biometric data for user authentication, making unauthorized access through deepfake identities more difficult.

Blockchain:

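Blockchain technology offers a decentralized, tamper-evident ledger: each record is cryptographically linked to the one before it, so any later alteration can be detected. This makes it well suited to maintaining secure records of digital transactions and to guarding against the manipulation of digital identities.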
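
The core idea behind such a ledger can be sketched with a simple hash chain, in which every record stores the hash of the previous one. This is a single-process illustration only: it has no network, consensus, or signatures, so it is not a blockchain in the full sense. The record fields (`customer_id`, `document_sha256`) and function names are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _block_hash(index: int, payload: Dict[str, Any], previous_hash: str) -> str:
    """Hash a record's contents together with the previous record's hash."""
    body = json.dumps(
        {"index": index, "payload": payload, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(chain: List[Dict[str, Any]], payload: Dict[str, Any]) -> None:
    """Append a record whose hash commits to everything before it."""
    previous_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "payload": payload,
        "previous_hash": previous_hash,
        "hash": _block_hash(index, payload, previous_hash),
    })


def chain_is_valid(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every hash and link; an edited record fails the check."""
    expected_previous = GENESIS_HASH
    for index, block in enumerate(chain):
        if block["index"] != index or block["previous_hash"] != expected_previous:
            return False
        if block["hash"] != _block_hash(index, block["payload"], expected_previous):
            return False
        expected_previous = block["hash"]
    return True


ledger: List[Dict[str, Any]] = []
append_record(ledger, {"customer_id": "C-001", "document_sha256": "ab12"})
append_record(ledger, {"customer_id": "C-002", "document_sha256": "cd34"})
```

Editing any stored payload after the fact changes its recomputed hash, so `chain_is_valid(ledger)` would then return `False`. A real deployment adds distribution and consensus so that no single party can quietly rewrite the whole chain.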

Regulatory and Legal Framework

Global Regulatory Landscape:

Countries are approaching the regulation of deepfakes in different ways, with some implementing strict penalties for malicious creation and distribution.

Legal Deterrents:

Laws that require explicit consent for the use of personal likenesses, enforced through heavy fines and penalties for violations, serve as a deterrent.

International Cooperation:

Because digital media and cyber fraud cross national borders, addressing them effectively requires cross-border collaboration.

Strategic Recommendations for Industry Adaptation

Banking and Insurance:

These sectors should invest in continuous training for staff to recognize and respond to deepfake threats and implement layered security protocols combining AI detection with human oversight.
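
One way to picture a layered protocol is as a triage rule that lets the detector decide only the clear cases and sends everything else to a person. The sketch below assumes a detector that returns a score between 0 and 1, where higher means more likely fake. The thresholds, the high-value limit, and all names are hypothetical and would need tuning against labeled data and each institution's risk appetite.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REJECT = "reject"


@dataclass(frozen=True)
class TriagePolicy:
    # Illustrative thresholds, not recommendations.
    review_threshold: float = 0.30
    reject_threshold: float = 0.90
    high_value_amount: float = 10_000.0


def triage(deepfake_score: float, transaction_amount: float,
           policy: TriagePolicy = TriagePolicy()) -> Decision:
    """Route a request using a detector score plus a business rule.

    deepfake_score: assumed detector output in [0, 1], higher = more likely fake.
    """
    if not 0.0 <= deepfake_score <= 1.0:
        raise ValueError("deepfake_score must be between 0 and 1")
    if deepfake_score >= policy.reject_threshold:
        return Decision.REJECT
    # Uncertain scores and high-value requests always get a human check.
    if (deepfake_score >= policy.review_threshold
            or transaction_amount >= policy.high_value_amount):
        return Decision.HUMAN_REVIEW
    return Decision.APPROVE
```

Routing high-value requests to review regardless of score reflects the point that automated detection should not be the only safeguard where losses would be largest.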

Online Marketplaces and Real Estate:

Online marketplaces and real estate platforms should strengthen verification processes for sellers and properties, respectively, and audit listings regularly for signs of fraudulent activity.

Medicine:

Healthcare providers should secure and verify medical imaging data with blockchain technology and strengthen professional verification systems to confirm the identities of medical personnel.

Deepfake Audio Detection

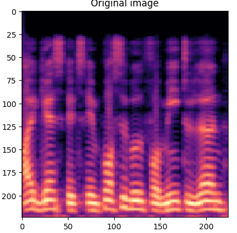

Deepfake Audio Detection:

This GitHub project focuses on identifying deepfake audio using Generative Adversarial Networks (GANs) and explainable AI techniques. It evaluates the quality of generated audio and provides tools for classification using models like MobileNet and VGG. The project also incorporates explainable AI methods such as SHAP and GradCAM to provide insights into the decision-making process of the models (Deepfake Audio Detection on GitHub).

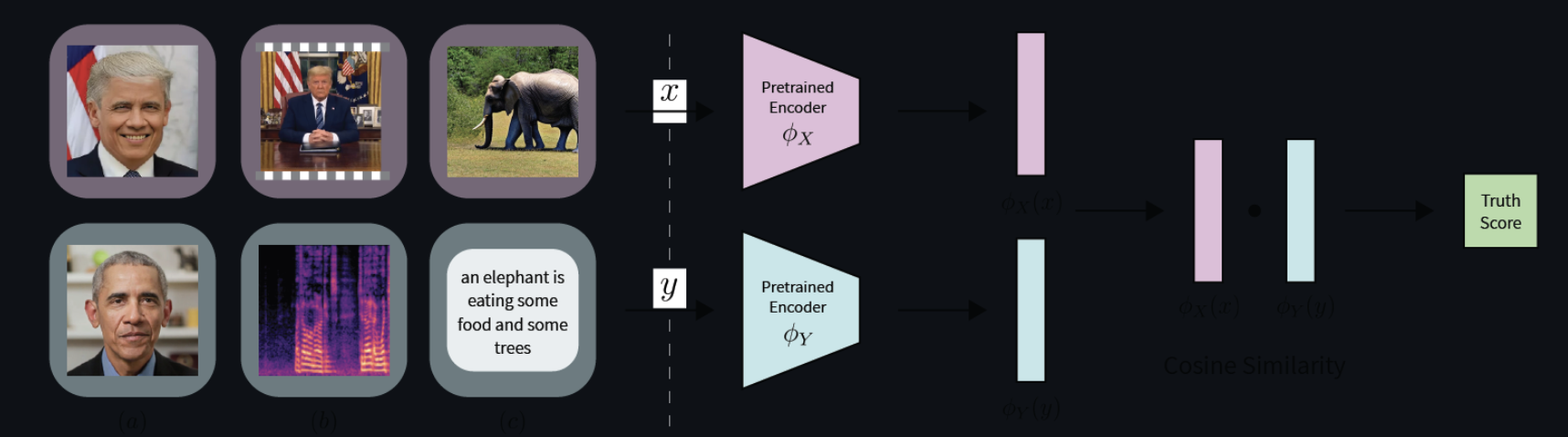

FACTOR:

FACTOR is a tool designed to detect deepfakes by exploiting the gap between the false facts a deepfake asserts (for example, a claimed identity) and how imperfectly those facts are synthesized in the media itself. It computes a truth score via cosine similarity to differentiate between real and fake media, which allows it to detect zero-day deepfake attacks that it has never seen before. This makes the tool particularly useful in scenarios where traditional detection methods might fail (FACTOR on GitHub).
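
The idea of a cosine-similarity truth score can be sketched as follows. This is not FACTOR's code. It assumes the media and a set of trusted references for the claimed fact have already been turned into embedding vectors by some encoder that is not shown, and it assumes the score is the best match against those references. The function names and the 0.5 threshold are hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def truth_score(media_embedding: Sequence[float],
                reference_embeddings: Sequence[Sequence[float]]) -> float:
    """Best similarity between the media and any trusted reference."""
    if not reference_embeddings:
        raise ValueError("need at least one reference embedding")
    return max(cosine_similarity(media_embedding, ref)
               for ref in reference_embeddings)


def looks_fake(media_embedding: Sequence[float],
               reference_embeddings: Sequence[Sequence[float]],
               threshold: float = 0.5) -> bool:
    """Flag media whose truth score falls below an assumed threshold."""
    return truth_score(media_embedding, reference_embeddings) < threshold
```

Because the check compares media against a claimed fact rather than against known fakes, it needs no training examples of deepfakes, which is what lets this style of detector handle unseen attack types.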

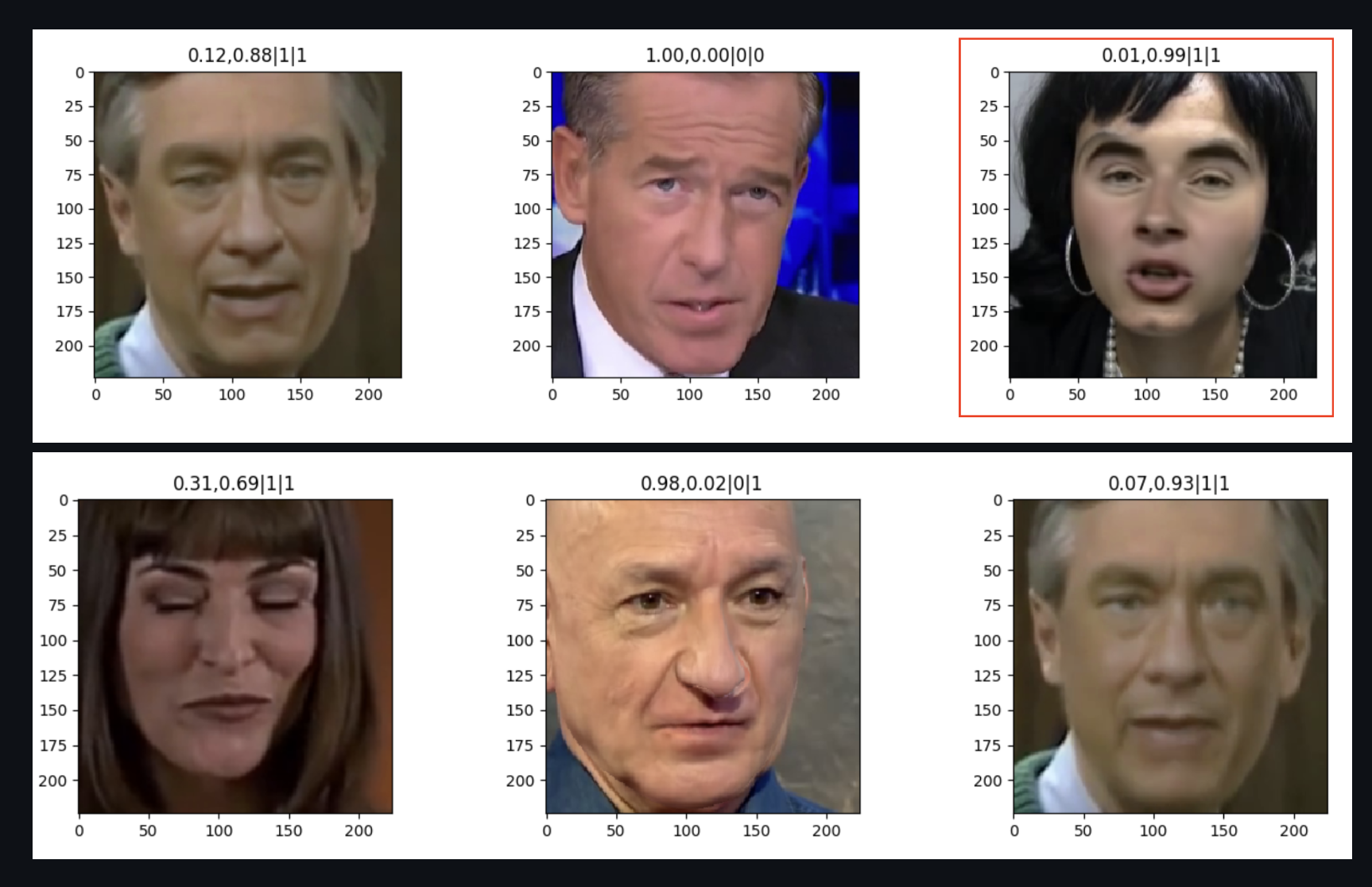

Dessa's DeepFake Detection:

This project by Dessa provides tools for deepfake video detection that are designed to work with real-world data. It uses a PyTorch model based on ResNet18, fine-tuned to detect deepfakes more effectively. The repository includes detailed setup instructions and scripts for managing and reformatting datasets, making it a practical tool for developers looking to implement deepfake detection systems (Dessa's DeepFake Detection on GitHub).

These tools represent a cross-section of current approaches to deepfake detection, covering audio, video, and image modalities. Each offers distinct features and methods for tackling the challenges posed by deepfake technology, helping industries such as banking and insurance better protect themselves from this form of digital fraud.

Conclusion

As deepfake technology continues to evolve, so too must the strategies to combat its misuse. Across industries, the focus should be on developing robust detection technologies, enforcing stringent regulations, and educating both professionals and the public about the risks and signs of deepfake fraud. By adopting a proactive and comprehensive approach, industries can safeguard themselves against the threats posed by this potent technology and maintain the trust that is fundamental to their operations.